Problem Solving With Time vs Tokens

How business will change as AI models become better, faster, cheaper

Hi there,

Today I’m writing about how I think business will change as AI models become better, faster, and cheaper. I’ll explore problem solving using time vs using tokens, the role niche knowledge and trade secrets will play in value creation, and how I’m using AI.

OpenAI is rushing to stay ahead in the generative AI race, where competitors including Google, Anthropic and Elon Musk’s xAI are investing heavily and regularly rolling out new products. Late last month, OpenAI closed a $40 billion funding round, the largest on record for a private tech company, at a $300 billion valuation.

Big news this week included OpenAI releasing its powerful new o3 and o4-mini models, and CNBC reporting that OpenAI is in talks to buy the vibe coding IDE Windsurf. Over at Global Custody Pro, I wrote about how everybody wants a platform as earnings season kicked off for the major global custodians.

Part 1: Time vs. Tokens

AI dramatically cuts the time needed for routine tasks like summarising documents, coding, generating yet another anime-style image, and drafting emails - turning hours of effort into minutes.

With the rise of agentic AI and tools like Deep Research, which most leading providers have implemented, entry-level research and analysis tasks can now be pushed to these tools to produce the sort of overviews a graduate or junior analyst may have been tasked with in a previous era.

You can now solve entry-level problems in two ways: paying for time or paying for AI tokens. Some more complex problems can even be solved through AI with skilled technical assistance along the journey.

However, this shift comes with trade-offs. You still need to:

Prepare clean data

Build integration systems

Verify outputs for accuracy and bias

AI redistributes effort rather than eliminating it completely. This balance works best for repetitive tasks needing slight variations or exploratory work where failure costs little.
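
To make the trade-off concrete, here is a minimal sketch in Python of how the two routes could be compared. Every figure in it (hours, hourly rate, token counts, per-million-token prices) is a made-up placeholder rather than a real price or salary, and the function names are my own. The only point is that the token route still carries a human verification cost on top of the token spend.

```python
def human_cost(hours: float, hourly_rate: float) -> float:
    """Cost of solving the task purely with paid human time."""
    return hours * hourly_rate


def token_cost(input_tokens: int, output_tokens: int,
               price_in_per_million: float, price_out_per_million: float,
               review_hours: float, hourly_rate: float) -> float:
    """Cost of the AI route: token spend plus the human time
    still needed to verify the output."""
    spend = (input_tokens / 1_000_000) * price_in_per_million
    spend += (output_tokens / 1_000_000) * price_out_per_million
    return spend + review_hours * hourly_rate


# All figures below are hypothetical placeholders for illustration only.
time_route = human_cost(hours=4.0, hourly_rate=50.0)
token_route = token_cost(
    input_tokens=200_000, output_tokens=20_000,
    price_in_per_million=2.0, price_out_per_million=8.0,
    review_hours=0.5, hourly_rate=50.0,
)

print(f"time route:  {time_route:.2f}")
print(f"token route: {token_route:.2f}")
```

With these placeholder inputs, almost all of the token route's cost is the review time rather than the tokens themselves, which is the redistribution of effort described above. Data preparation and integration work are left out of the sketch and would push the token route's cost up further.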

Over-reliance creates risks:

Critical thinking skills may decline

Errors can scale rapidly

Data privacy and cybersecurity concerns emerge

Human expertise remains essential for strategic planning, coaching, and negotiations: areas requiring deep domain knowledge, ethical judgment, and empathy.

The trend towards pushing tasks to token consumption via APIs is accelerating, though. Automated systems already manage large parts of logistics and warehousing. In a few years, hyper-capitalism will demand that agent-to-agent interactions handle entire business functions and even make decisions to maximise profits through contract renegotiation, restructuring, factory relocation and complex cross-border corporate structuring arrangements.

As AI token costs replace labor costs, maintaining high human staff levels may become financially unsustainable unless protected by regulation or other guild-like protections.

There could even be a luxury-good-style shift in how we see human labour - did you use a commodity interior design model when you renovated your beach house, or an elite human interior designer who only does one renovation a year for “the right client”?

Part 2: Niche Knowledge and Trade Secrets

As AI token costs become cheaper, three knowledge layers emerge:

Common data anyone can access

Repeatable processes AI can learn quickly

Tacit expertise - subtle insights and relationships that don't make it into training data

The first two layers rapidly become commodities, while the third becomes the main source of competitive advantage.

Smart companies will:

Identify which parts of their work fall into each layer

Focus on strengthening their tacit knowledge

Protect their trade secrets as a business priority

Build custom AI models on their proprietary data

For example, an engineering firm could train a specialised model on their entire project history, connecting it to all their systems and digitised records so "the way we do things" becomes embedded in AI tools.

This shift creates new opportunities and threats:

Teams using coordinated AI agents can bypass traditional outsourcing

Large service providers face competition from smaller, specialised firms

Data security becomes essential, not just a compliance requirement

Employee value shifts toward those who can direct AI systems while applying deep domain expertise

The greatest disruption may come from nimble startups using AI to deliver what established firms do, but faster and cheaper. Once these newcomers gain client trust, traditional white-collar business models will face serious challenges.

As the capabilities of reasoning models and agents grow, the “moat” of tacit knowledge will also shrink as co-operating and competing agents “figure out” hard-won niche knowledge through entrepreneurial trial-and-error at speed.

Part 3: How I'm Using AI

I'm getting great value from AI in several ways:

Speaking my ideas aloud while Gemini converts them to organised notes

Getting feedback on thin reasoning and logical gaps in said notes

Using voice chat for my child's random questions

Identifying plants and birds with photo recognition

Solving technical problems by pasting error messages

Never using traditional search engines anymore

I'm also learning through hands-on projects:

Building a risk tool for my own data journalism

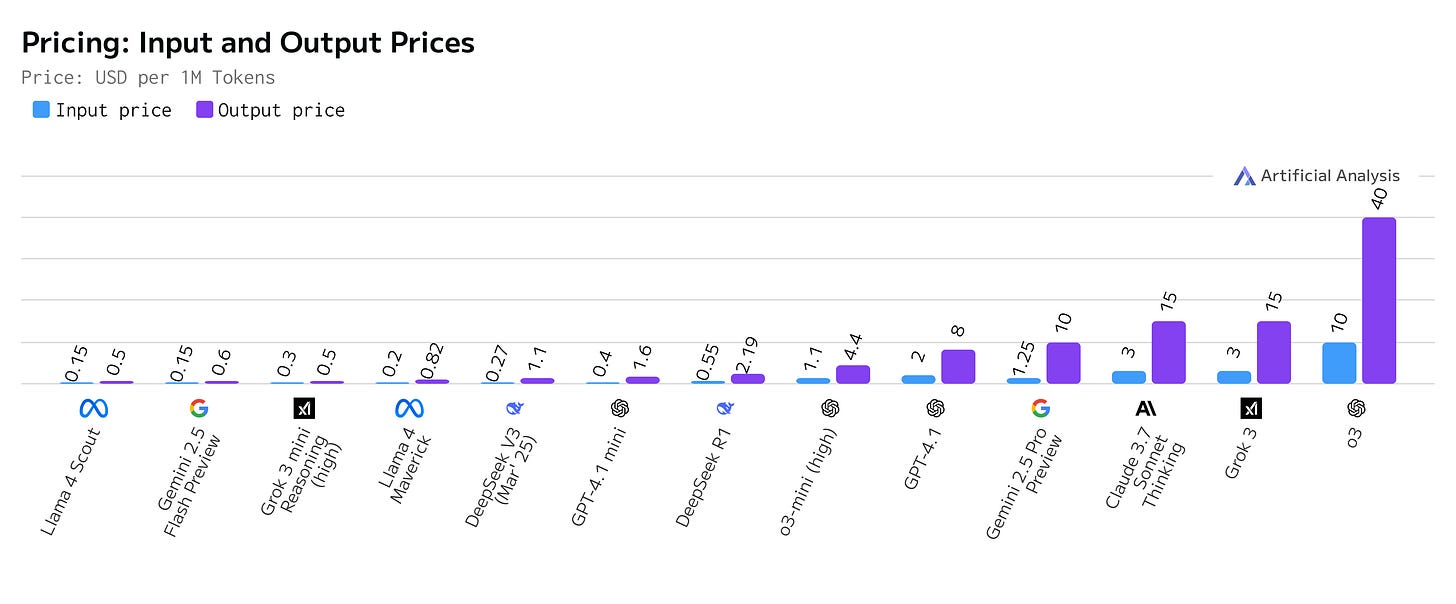

Learning to better manage AI costs by using my own API keys

Working through technical debugging challenges that stretch my skills

Though progress isn't very smooth, I've learned about:

Rust programming

Backend API development

Unit testing for APIs

Data visualisation with D3

Frontend integration drama

More effective prompting techniques

As AI becomes more accessible, it's going to change how businesses compete. A major impact will be enabling non-technical experts to create their own tools using natural language commands.

Success in this new environment requires:

Strategic application of AI

Protection of proprietary knowledge

Developing skills that combine domain expertise with leveraging AI

While caution and skepticism are still obviously needed with AI outputs, I find the constant dismissal of these powerful tools by some folks increasingly frustrating.

We’re still at the outset of the journey, and as these models get better, faster, and cheaper every few weeks, more and more tasks are better pushed to a model than given to a human.

This is what time vs tokens means in the end - it will increasingly become a waste of time to even bother having human specialists in some domains of expertise.

This drive to automate and push things to the machines is going to change the way we live, work, and do business, and there are going to be a lot of social issues downstream of this feasible set of outcomes.

I hope you enjoy the Easter weekend.

Regards,

Brennan