Hi there,

This week I’m going to write about AI 2027, the latest AI prediction story taking the internet by storm, and check in on a new side project I’ve been vibe coding with Windsurf AI.

Part 1: What is AI 2027?

AI prediction is hard, but AI 2027 is a recent attempt at sketching out how AI might evolve over the next few years.

The authors are AI researchers Daniel Kokotajlo, Eli Lifland, Thomas Larsen, and Romeo Dean, while Scott Alexander helped improve the writing.

Their core argument is about speed: if ordinary human science would have run up against diminishing returns and physical limits after 5–10 years of further research, then AIs with a 100x research multiplier would run up against those same diminishing returns and limits after just 18.25–36.5 days of research.
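The 18.25–36.5 day range is simple arithmetic: divide 5–10 years of human research time by the 100x multiplier. Here is a minimal Python sketch of that calculation. It assumes a 365-day year and a purely linear speed-up, which simplifies the authors’ model; the function name is made up for illustration.

```python
def compressed_days(human_years: float, multiplier: float) -> float:
    """Calendar days an AI research effort would need to match `human_years`
    of human research, assuming progress scales linearly with `multiplier`."""
    days_per_year = 365  # ignores leap years, consistent with the 18.25 figure
    return human_years * days_per_year / multiplier


if __name__ == "__main__":
    for years in (5, 10):
        print(f"{years} years at 100x -> {compressed_days(years, 100):.2f} days")
```

Both ends of the range fall out directly: 5 × 365 / 100 = 18.25 and 10 × 365 / 100 = 36.5.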

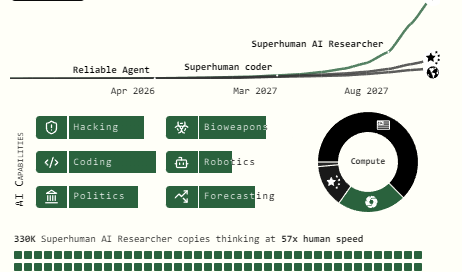

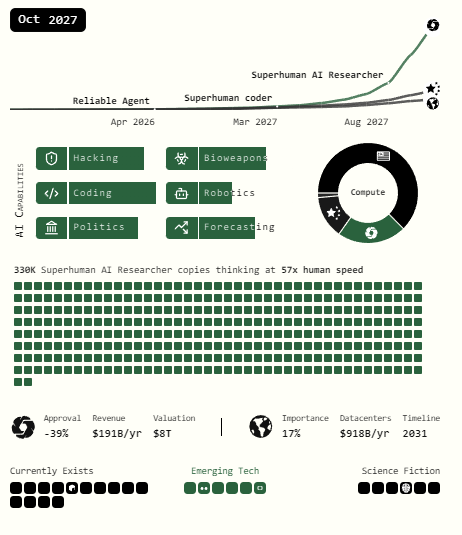

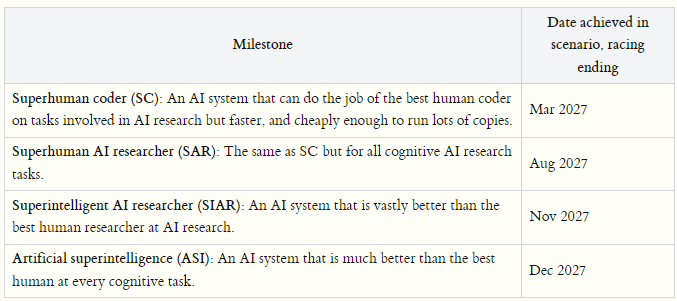

Here are some of their forecasts for capability in 2027:

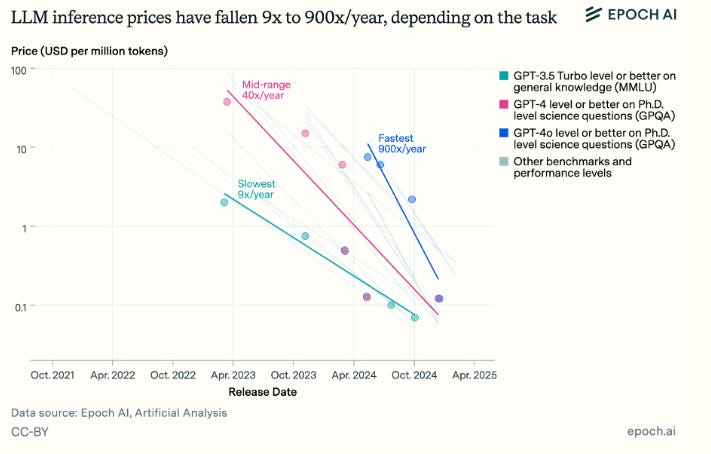

And look how fast costs are falling. It’s expensive to use the latest models, but the trend is clear: the value a human will need to add to be employable in 2–3 years’ time is skyrocketing.

I think this trend will only accelerate as open-source models improve and as Chinese AI firms face strong incentives to invest heavily in R&D.

I won’t spoil the “choose your own ending” narratives, but I recommend reading AI 2027 all the way through, especially if you’re still in the “AI is just a fad” frame of mind.

The people I know who consistently missed, or were late to adopt, every major technology trend since the 1990s are now dismissing the potential of artificial intelligence.

History is repeating itself, and they're likely to be left behind again. Those who fail to recognize and adapt to this transformation won't make it (NGMI).

Part 2: Windsurf AI Vibe Coding

As part of having fun with AI, I started to build a “Minimum Viable Product” for a risk analysis tool to help on the data journalism side of Global Custody Pro.

Over the course of a few days, I’ve been able to build a basic extract-transform-load (ETL) process to convert data from CSV to JSON, a Rust backend, a basic frontend, and a lot of unit tests.
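For readers new to the term, an extract-transform-load step reads raw data, cleans it, and writes it out in the format the app consumes. The sketch below is a generic Python illustration of a CSV-to-JSON ETL, not the project’s actual pipeline. The column names (`custodian`, `assets_usd_bn`) and sample rows are hypothetical, chosen only to show the three stages.

```python
import csv
import io
import json


def extract(csv_text: str) -> list[dict[str, str]]:
    """Extract: parse CSV text into one dict per row, keyed by the header."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict[str, str]]) -> list[dict[str, object]]:
    """Transform: trim whitespace and convert the hypothetical `assets_usd_bn`
    column to a float, skipping rows where that value is missing."""
    cleaned = []
    for row in rows:
        value = (row.get("assets_usd_bn") or "").strip()
        if not value:
            continue  # drop incomplete records rather than guessing a value
        cleaned.append({
            "custodian": row["custodian"].strip(),
            "assets_usd_bn": float(value),
        })
    return cleaned


def load(records: list[dict[str, object]]) -> str:
    """Load: serialise the cleaned records as a JSON array."""
    return json.dumps(records, indent=2)


# Hypothetical sample input; not real custody data.
SAMPLE_CSV = """custodian,assets_usd_bn
Custodian A, 1200.5
Custodian B,
Custodian C,980
"""

if __name__ == "__main__":
    print(load(transform(extract(SAMPLE_CSV))))
```

Keeping each stage as a separate function makes each one easy to unit test on its own, which fits the test-heavy approach described above.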

There are serious challenges, especially with debugging errors and loss of context leading to model mistakes.

One example is safety features that require me to run commands manually, or guardrails that stop low-risk sequences of actions from completing.

Another is the model looking at only a small part of a file within its context window, rather than the entire file, and then proposing a change that doesn’t align with what has already been implemented.

This isn’t a major issue for me as I’m just building a fun side project, but I can see that you need a reasonable level of technical ability to get value out of these tools beyond building a calculator app or similar.

I’m fortunate that I took a structured approach to the initial prompts and architecture, and made sure basic project hygiene was in place from the start.

The main “bad” decision I think I made was choosing Gemini 2.5 Pro instead of Claude 3.7 Sonnet (Thinking), but I really wanted to test-drive the latest Gemini model.

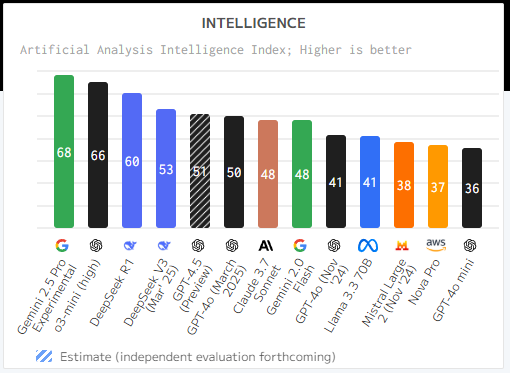

I think the challenges I had with this model had more to do with Windsurf AI’s rate limits, token context constraints, and my own skill than with the model itself, which is leading the Artificial Analysis AI model benchmarks.

Yet, as a non-developer, I am almost at the point where I can demo the basic end-to-end functionality I want, and I have a clear product vision for building this risk tool out further over time.

My plan is literally just to grind through and use the Windsurf credits I paid for until it works how I want it.

The base case is that I’ll be able to use it to support data journalism; the bull case is that I can expand its functionality further.

This is a specific data analysis problem I would previously have used RStudio for; it would never have occurred to me to try building a web app for it.

There is no bear case, and that’s the main insight from this experiment: from the debugging process alone, I have learned a ton about putting together a project from the “developer perspective”.

At the intersection of AI prediction and hands-on experimentation lies the real story: AI is already creating economic value that wouldn't otherwise exist.

Whether it's researchers forecasting 2027 capabilities or my weekend project with Windsurf AI, we're witnessing the expansion of what's possible.

For $200 in AI credits plus my time investment, I've created something worth an estimated $10,000 in traditional developer day-rate costs and gained valuable technical insights along the way.
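Expressed as a ratio, the cash outlay returns roughly 50x, excluding the time invested. Here is a quick Python sketch of that arithmetic. The $800 day rate is a hypothetical figure, used only to show how a $10,000 estimate translates into developer days. It is not the rate behind the original estimate.

```python
def value_multiplier(estimated_value: float, cost: float) -> float:
    """Ratio of estimated replacement value to cash spent (time not counted)."""
    return estimated_value / cost


def implied_developer_days(estimated_value: float, day_rate: float) -> float:
    """Number of contractor days the estimate corresponds to at `day_rate`."""
    return estimated_value / day_rate


if __name__ == "__main__":
    print(value_multiplier(10_000, 200))  # 50.0
    # HYPOTHETICAL day rate for illustration only.
    print(implied_developer_days(10_000, 800))  # 12.5
```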

This isn't just cost savings; it's net new value creation that expands the economic pie.

As AI models, especially agentic ones, continue to advance rapidly, expect this value multiplier effect to accelerate across all business domains, turning previously impractical ideas into accessible realities.

Have a great weekend,

Brennan